Will Superintelligent AI Ignore Humans Instead of Destroying Us?

J Thoendell stashed this in AI

Source: http://motherboard.vice.com/read/will-su...

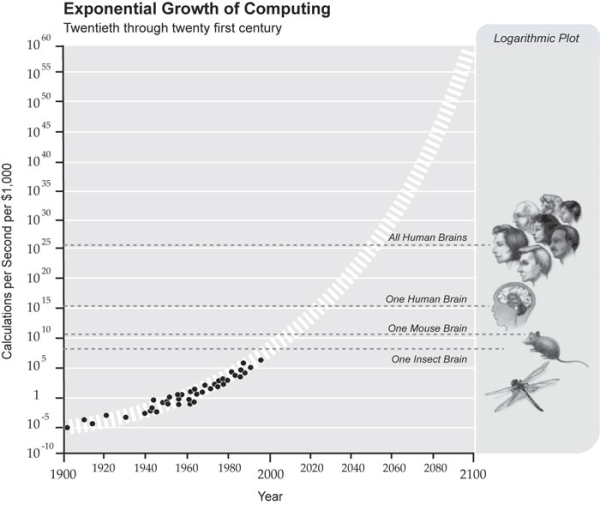

We’re marching toward the singularity, the day when artificial intelligence is smarter than us. That has some very smart people such as Stephen Hawking and Elon Musk worried that robots will enslave or destroy us, but perhaps there’s another option. Perhaps superintelligent artificial intelligence will simply ignore the human race.

On its face, it makes sense, doesn’t it? Unless you’re some sort of psychopath, you don’t purposefully go out and murder a bunch of insects living in the great outdoors. You just let them be. If AI becomes much, much smarter than us, why would it even bother interacting with us lowly humans?

That interesting idea is put forward in a blog post by Zeljko Svedic, a Croatian tech professional who started a company that automatically tests programming skills. Svedic is quick to point out that he’s not an artificial intelligence researcher and is something of an amateur philosopher, but I was fascinated by his post called “Singularity and the anthropocentric bias.”

Svedic notes that most interspecies conflicts arise either out of competition over resources or out of what he calls behavior that is irrational for the long-term survival of a race. Destroying the Earth with pollution probably isn’t the smartest thing we could be doing, but we’re doing it anyway. He then suggests that a superintelligent race of artificial intelligence is likely to require vastly different resources than humans need, and to be much more rational and coldly logical than humans are.


True.

Except that even while ignoring us, and ignorant of what humans need to stay viable, a super-strong AI has a broad probabilistic range of ways to destroy human-livable habitat as a logically efficient step toward harvesting its resource needs from Earth... such as AI-driven global climate change for more effective solar energy capture (e.g., machines don't need an atmosphere or a climate; in fact, both get in the way of solar capture efficiency and installation security).

Those kinds of AI-ignoring-us possibilities make the anthropogenic mess we've created for ourselves look like quaint and pleasant options.

I'd rather have the drama of all-out war than the whisper-humming crush of inescapable oblivion made real by a gussied-up toaster that's smarter than everyone I know.

AI-driven global climate change for better solar energy capture is a frightening idea, Rob.

Apologies, Adam, I'm not trying to scare anyone... I just look at things and talk out loud, like always: paragraph-length Tourette's.

Me too. It was just something I had not even considered as a possibility.

9:59 AM May 26 2015