How (And Why) I'm Circumventing Twitter's API Instead of Using It

Lucas Meadows stashed this in Web

Stashed in: PandaWhale, Twitter!, JavaScript, Code, API, Twitter, Software!, Awesome, Sticking it to The Man!, Web Development, The Matrix, APIs, Impossibru!, Ain't Nobody Got Time For That, @lindsaylohan, Hacker News!, dev talk, @lmeadows, Beavis and Butthead, Pacman!

I still like using Twitter. Though I have often complained about their shitty API, I still have respect for the product and the company. The story of Twitter is a valuable case study: it went from humble beginnings as Twttr (an idea so simple and crude that most people casually dismissed it) to the hugely popular and valuable service we know today. It's the story of a team that persisted, improved its design, and kept pimping the product until it became viable.

I believe that the API contributed hugely to Twitter's growth, which was really kind of miraculous, since nothing about it was particularly well designed. For one thing, it has always been pretty fail-y, even by OAuth standards. And rather than scaling the service and improving availability, Twitter has consistently fallen back on limiting API usage through restrictive policies and rate limits. It's also pretty clear that Twitter never invested much time or thought into refining the API into a good general resource for developers building arbitrary apps. Rather, the API was built more or less directly on top of the same backend infrastructure as the website itself. You have to do significant post-processing to get the data into a form that's useful for anything other than replicating the core Twitter experience (which they famously don't want you to do). Couple that with the fact that they also don't want you to deviate too far from the "core Twitter experience" and you have one fairly fucktarded API.

But Twitter has so much awesome data that many devs (including me) were willing to work through the many, many problems with Twitter's API. I've implemented several Twitter-based features on PandaWhale which might have been great cross-platform experiences, but between the constant failures, the rate limits, and the fact that Twitter may cut off API access at any time, Adam and I have decided to take a different route: circumventing the API and fetching the data through what you might call "less official" means.

The first plan we cooked up was to make an underground Twitter API. With the help of 1,000 or so Mechanical Turk workers, we'd create thousands of Twitter apps (i.e., OAuth API key pairs) and tens of thousands of fake users to use those apps, so that our servers could consume the bots' feeds and aggregate them into a pseudo-firehose. We figured that if we had ~50K bot users following the top 250K authors on Twitter (ranked by follower count, human or bot), then the aggregated home page feeds of the bots would represent the bulk of the useful data on Twitter.
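The aggregation step is the one technical piece of that plan worth sketching. Below is a minimal Python sketch, assuming each bot's home timeline arrives as a list of (tweet_id, author, text) tuples sorted newest-first, and relying on Twitter status IDs increasing over time. Because follow lists overlap, the same tweet can show up in several bots' timelines, so the merge drops repeats. The function name `pseudo_firehose` and the tuple layout are illustrative assumptions, not part of any real API. (For scale: split evenly with no overlap, each of ~50K bots would only need to follow about 5 of the 250K authors.)

```python
import heapq
from typing import Iterable, Iterator, Optional, Tuple

# Hypothetical record layout: (tweet_id, author, text).
Tweet = Tuple[int, str, str]


def pseudo_firehose(timelines: Iterable[list]) -> Iterator[Tweet]:
    """Merge newest-first home timelines into one deduplicated stream.

    Assumes every input list is already sorted by descending tweet ID,
    the order home timelines come back in. After the merge, copies of the
    same tweet are adjacent, so remembering the last emitted ID is enough
    to drop duplicates without keeping a growing set.
    """
    last_id: Optional[int] = None
    for tweet in heapq.merge(*timelines, key=lambda t: t[0], reverse=True):
        if tweet[0] != last_id:
            last_id = tweet[0]
            yield tweet
```

Since the output stays newest-first, a consumer can stop reading as soon as it reaches an ID it has already processed.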

Of course we knew that Twitter would try to shut us down, but they would be forced to play the game of tracking down which API key pairs and accounts were being used. With enough help from Mechanical Turk churning out new dummy apps and users, EC2 giving us new IP addresses, and perhaps even real people "donating" their feeds to us by adding one of our read-only apps, we felt confident that Twitter could never shut us down. And we'd make the underground firehose available for free to anyone who wanted to develop Twitter apps. Pretty cool, huh?

The problem is that this plan is overly complex and expensive. My work on the PandaWhale bookmarklet taught me that the best way to scrape data from the big "permalink machine" consumer web sites (Twitter, FB, Tumblr et al.) is with JavaScript in a browser context. Sites are DOM. JavaScript is DOM. HTTP server-based scraping has a rich and noble history, but it doesn't work well in this brave new world of session-based feeds rendered with JavaScript. And all those convenient programming hooks and DOM node patterns that some dev at Twitter created to make his job easier can (and should!) also be exploited by me to scrape the site. APIs are ultimately just a way to shape the scraping behavior of third-party developers by giving them a blessed way to scrape your site. If you have compelling data and your API is a turd, you're asking to be scraped. That's the web.

So we can't use Twitter's API due to a fatal combination of poor technology and poor policy, and for security reasons script injection only works on an ad hoc basis in response to a user action, like in a bookmarklet or browser extension. So how do we take advantage of script injection in an effective and ethical way? We already can (and do) let users stash tweets with our bookmarklet, just in case they want to have some prayer in hell of finding the tweet again in a week's time. But, while useful to the user, this approach doesn't let us do some pretty basic things, like stashing the @-replies to the tweet that arrive after it was stashed. Note that this is often not even possible through the API: if a user is trying to save someone else's tweet (and the author is not a PandaWhale user), then we can't parse the author's entire @-reply history to find the few replies that were in response to that tweet. It's amazing that after all this time the API doesn't even have a way to fetch @-replies given a tweet ID, and we're forced to resort to something as barbaric as parsing entire @-reply histories (side note: this inefficiency puts extra load on Twitter's servers and eats up rate limits; a little refinement to the API would help everyone a lot in this case).
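To make the "barbaric" workaround concrete, here's a minimal Python sketch of the filtering step, assuming the author's @-reply history has already been fetched as a list of v1-style tweet dicts that carry an `in_reply_to_status_id` field (`None` for tweets that aren't replies). Every tweet in the history has to be scanned just to find the handful that answer one tweet. `replies_to` is a hypothetical helper name.

```python
from typing import Any, Dict, List


def replies_to(tweet_id: int, reply_history: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return the tweets in an @-reply history that answer one specific tweet.

    Assumes each dict has an 'in_reply_to_status_id' key that is None for
    plain mentions. The whole history is scanned, which is the wasted work
    (and wasted rate limit) described above.
    """
    return [t for t in reply_history if t.get("in_reply_to_status_id") == tweet_id]
```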

So I took this idea a step further and decided that the best way to deal with Twitter was not to use their API nor create an underground one, but rather to run a headless browser like PhantomJS on a dedicated server. I can input some sockpuppet Twitter creds (hell, I could even input my own Twitter creds) and I've got a valid Twitter session running on a browser in the cloud. Any time PandaWhale needs to fetch additional data from Twitter (like to get @-replies to a tweet which a user stashed a few hours ago) I can just have my cloud browser navigate on over to the tweet's permalink, expand it, and look to see if there are any new replies -- much as I would if I were doing it manually for myself. I've done away with the pain of OAuth credentials. I've done away with the development pain (and the business risk) of relying on Twitter's API.
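Here's a rough Python sketch of the bookkeeping side of that revisit loop. It assumes a hypothetical `scrape_reply_ids(url)` hook standing in for the headless browser (load the permalink, expand it, return the reply IDs on the page) and uses the familiar `twitter.com/<screen_name>/status/<id>` permalink pattern. Only the logic for spotting new replies is shown; driving the browser itself isn't.

```python
from typing import Callable, Iterable, List, Set


def permalink(screen_name: str, tweet_id: int) -> str:
    # Tweet permalinks follow the twitter.com/<screen_name>/status/<id> pattern.
    return f"https://twitter.com/{screen_name}/status/{tweet_id}"


def check_for_new_replies(
    screen_name: str,
    tweet_id: int,
    seen_reply_ids: Set[int],
    scrape_reply_ids: Callable[[str], Iterable[int]],
) -> List[int]:
    """Revisit a stashed tweet's permalink and return replies not seen before.

    scrape_reply_ids is a hypothetical stand-in for the cloud browser: given
    a URL, it returns the reply IDs found on the rendered page.
    seen_reply_ids is updated in place so the next visit only reports
    replies that showed up after this one.
    """
    new_ids: List[int] = []
    for rid in scrape_reply_ids(permalink(screen_name, tweet_id)):
        if rid not in seen_reply_ids:
            seen_reply_ids.add(rid)
            new_ids.append(rid)
    return new_ids
```

Passing the scraper in as a callable keeps the diffing logic testable without a live browser session.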

I believe that this way everyone will be happier, and all Twitter apps should do this. Twitter gets reduced loads on its servers and doesn't need to maintain its API anymore, devs get to roll out awesome features more easily and more effectively, and users get more reliable & performant apps which won't go belly up in a week when Twitter revokes their API keys :)

And perhaps we can stop turning into a web of apps and go back to being a web of links.

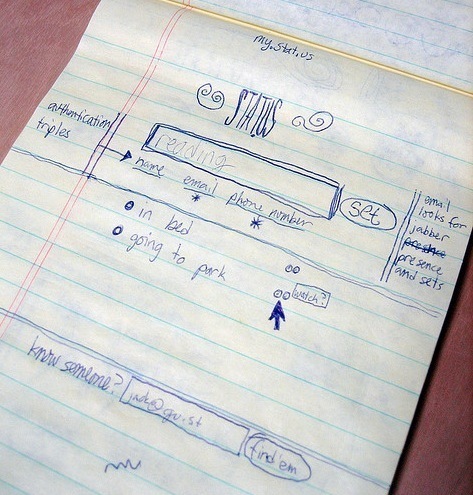

First of all, this is brilliant:

The best way to deal with Twitter was not to use their API nor create an underground one, but rather to run a headless browser like PhantomJS on a dedicated server. I can input some sockpuppet Twitter creds (hell, I could even input my own Twitter creds) and I've got a valid Twitter session running on a browser in the cloud...

My question is why Twitter would even want to maintain an API anymore.

The headless browser technique respects the Twitter terms of service but doesn't force developers to suffer through an unreliable, rate-limited API.

It's a win for everyone, IMHO.

Do not try and bend the rate limit. That's impossible. Instead... only try to realize the truth.

Not when you run PhantomJS in the cloud, baby!

The PhantomJS ghost looks like he wants to take out Pacman.

Bonus points for The Matrix and Lindsay Lohan.

The limit does not exist!!!

Well, the limit shifted. As one of my esteemed colleagues says, it's still just scraping at the mercy of the page layout.

An API is just a social contract that the company agrees to keep things backwards compatible for a long time.

Given that Twitter has changed its social contract several times, we've always been at their mercy anyway.

I want to renegotiate my social contract.

@greg Not to be rude, but based on that comment I think you and your "esteemed colleagues" are not particularly familiar with developing Twitter apps.

Yes, the rate limit "shifted." IT SHIFTED DOWN.

It used to be 150 calls per hour unauthenticated (per IP) and 360 per hour authenticated (per user OAuth token).

Now the unauthenticated limit is still 150, but the authenticated limit is down by 10 per hour, to 350. They also added feature-specific rate limits for certain API methods, which stack on top of the overall limits.
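Working within these caps means budgeting calls on the client side: 350 calls per hour is roughly one call every 10 seconds (3600 / 350 is about 10.3). A minimal sliding-window limiter in Python might look like the following. The class name `HourlyBudget` is hypothetical, and it only tracks one cap; the stacked per-method limits would need their own budgets, and Twitter's server-side accounting remains the real authority.

```python
import time
from collections import deque
from typing import Callable, Deque


class HourlyBudget:
    """Client-side sliding-window limiter: at most `limit` calls per `window` seconds."""

    def __init__(self, limit: int = 350, window: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the limiter can be tested without waiting
        self.calls: Deque[float] = deque()

    def try_acquire(self) -> bool:
        """Record a call and return True if it fits in the current window."""
        now = self.clock()
        # Forget calls that have slid out of the window.
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()
        if len(self.calls) < self.limit:
            self.calls.append(now)
            return True
        return False
```

A caller that gets `False` back would queue or drop the request rather than burn a call that Twitter will reject anyway.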

As to your second point about being "at the mercy of page layout," do you think you are any less at Twitter's mercy when using the API? How about the significant business risk of just being cut off altogether? What if they just shut down the API, period? Yes, I realize that (in theory) APIs are a contract

And what's so bad about having to rewrite the scraper occasionally? So some dev at Twitter spends 2 hours tweaking a feature, and I spend 10 minutes adjusting the scraper logic.

"An API is just a social contract that the company agrees to keep things backwards compatible for a long time."

I see your patronizing remark and raise you one: try developing a Twitter app, or even doing some cursory research on how Twitter treats third-party developers and we can continue the discussion then.

Look, this isn't meant to be a one-size-fits-all solution that you implement once and it lasts forever.

But it's a feasible approach which will be more effective in the long run than using Twitter's shithead API. They don't understand what it means to be an API provider, and building out an app infrastructure based on the idea that Twitter will respect the social contract is foolhardy.

For what it's worth your technique is regularly employed by Googlebot too: http://onlinemarketingnews.org/just-how-smart-are-search-robots

I always wondered how the Fail Whale convinced the Twitter birds to carry it.

You are now Hacker News famous!

The Hacker News folks make three really smart points:

1. If you don't distribute the PhantomJS calls, this behavior will be seen as bot-like and blocked by Twitter.

2. You have to rewrite the headless scraper every time Twitter changes their page layout.

3. This solution does not access all the tweets in the past we'd like access to.

Hmmm... will I respect the new Twitter API ToS?

http://apijoy.tumblr.com/post/32627042250/hmm-will-i-respect-new-twitter-api-tos

When common sense is outlawed, only outlaws will have common sense.

Adam, those sound exactly like the kind of kneejerk objections that some HN dweeb would make just to disagree.

1. If you don't distribute the PhantomJS calls, this behavior will be seen as bot-like and blocked by Twitter.

Trivial to distribute. And this objection applies to any scraping approach. I know I said in the example that I'd run just a single browser, but we'd get a long way with our product on just that one. Still easier than using the API or replicating it with an underground firehose. Next.

2. You have to rewrite the headless scraper every time Twitter changes their page layout.

True of any scraper. Also true of our bookmarklet approach. Also true of using any API which is subject to change. Also true of computing in general. Any one change is not difficult to make, and in general it will be cheaper for me to make the logic change than it is for Twitter to tweak their DHTML. This is a particularly stupid point. Next.

3. This solution does not access all the tweets in the past we'd like access to.

Neither does the API. But at least on the web you can always browse to a tweet's permalink (and see up to 5 replies). Beats the API. Any further objections?

Really, who cares what Hacker News assholes say?

These pro-Twitter API dickheads can continue using it, but they've been warned.

Our system's effectiveness and efficiency will speak for itself.

@the Joy of APIs

No, it probably won't! It definitely won't be compliant given how we use their data. That's the main downside of this approach.

However, if you feel that their API terms are abusive, this is a good way to make a stand while making your Twitter integration work better.

Hi Lucas, I wasn't being patronizing at all. I have developed apps and am familiar with the controversy.

If you have deployed software to 3rd parties, then if your scraper breaks, they blame you. If the API breaks, they blame twitter and are more willing to forgive.

This is why we have to present API neutrality as a best practice for suppliers, and use APIs that are neutral toward 3rd parties, with no discrimination and no tiering.

Perusing the Hacker News thread: http://news.ycombinator.com/item?id=4623259

Looks like you've answered all the major objections.

The big one is similar to what Greg said: it's a dance to keep up with every UI tweak Twitter makes.

Twitter does seem to be ever tweaking their UI like a child that can't figure out what it wants.

Here is what developers expect from an API and its supplier, and Twitter's API is stuck on the 3rd floor because of changing policies that may break your business!

http://api500.com/post/27366085761/developers-api-pyramid-of-needs-what-do-we-expect

@Adam: In practice, the ever-changing DOM problem is not that big of a deal. I've been able to maintain that logic in our own bookmarklet in a very cost-effective way.

This is not a compelling argument, to me. If you want Twitter data you have basically 3 choices:

- Use the API

- Traditional scraping

- Headless browser scraping

It sounds like these naysayers are partial to solution 1. Then they should go for it. They should just be aware that the rate limits are a practical constraint, and there is a serious risk of Twitter changing its policies or just shutting off the API altogether.

Solution 2 is not practical. Twitter is DHTML-heavy and loads content asynchronously, so server-side scraping won't work very well. If you do it in Node you'll get the benefits of having a DOM/JS engine handy, but it's really not optimized for being a headless browser, and you'll still want to use sessions/following at some point. Also, you get logged-out views when using a traditional crawler. This approach is also subject to the "ever-changing HTML problem."

With headless browsers you get sessions and native JS/DOM handling. As a DHTML-heavy site, Twitter is incentivized to plant plenty of handy hooks (classes and IDs) in their DOM, so you don't have to rely on brittle XPath techniques. If they tweak a feature, they're likely to keep the same hooks in place so as not to break peripheral logic in the course of restyling. If the changes are so extensive that they break existing JS logic, then they are probably going through another major redesign anyway (and probably a major policy reevaluation as well).
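To illustrate keying off hooks rather than XPath, here's a Python sketch using the standard library's `html.parser`. The markup it expects (an element whose class list contains `tweet` and that carries a `data-tweet-id` attribute) is an assumption about Twitter's DOM for illustration, not a documented contract. Inside PhantomJS the equivalent lookup would run in page context via `page.evaluate`, with a CSS selector such as `.tweet[data-tweet-id]`.

```python
from html.parser import HTMLParser
from typing import List


class TweetIdCollector(HTMLParser):
    """Collect tweet IDs by matching a class hook, not a position in the tree.

    Hypothetical hooks: a 'tweet' token in the class attribute plus a
    'data-tweet-id' attribute on the same element.
    """

    def __init__(self) -> None:
        super().__init__()
        self.tweet_ids: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        # Valueless attributes come through as None, hence the `or ""`.
        classes = (attr.get("class") or "").split()
        if "tweet" in classes and attr.get("data-tweet-id"):
            self.tweet_ids.append(attr["data-tweet-id"])


def tweet_ids(html: str) -> List[str]:
    parser = TweetIdCollector()
    parser.feed(html)
    parser.close()
    return parser.tweet_ids
```

Because the match is on a class token rather than on where the element sits, wrapping tweets in new containers during a restyle doesn't break it; renaming the hook would.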

I'm not sure how many ways I can say this, but it's just not that big of a problem in practice. Sure, the API is a "backward compatible contract," but it's shitty and unreliable, and you're going to do significant post-processing of their data for all but the most trivial, most Twitter-inspired, derivative features. Tweaking JS scraper logic takes a small fraction of the time it takes a Twitter dev to tweak a feature.

BTW, that's how Encryptanet got to be the very first and only micropayments partner with PayPal: we were using a browser-based integration and PayPal couldn't shut it down, so they ended up deciding to partner with us.

@greg Sorry for overreacting.

Yes, scrapers will break, and there will be a certain amount of maintenance you must do to keep in lockstep with Twitter's DOM.

However, in practice I find that Twitter's OAuth is often down, and under those circumstances the app breaks and you have absolutely no control over it whatsoever. At least with a scraper you can do a quick fix and roll a patch to prod in a few minutes instead of waiting hours for FB/Twitter/LinkedIn to get its shit together and bring their OAuth servers back up.

Perhaps technical people are more forgiving when they can see it was the OAuth provider's fault, but I find that non-technical people often just say "YOUR LOGIN IS BROKEN LOL" or "Y U NO LET ME POST 2 TWITTER???".

I just feel that OAuth has not at all lived up to its promise of making third party app development easier and safer, particularly in Twitter's case. I'd go so far as to say that, overall, OAuth just makes the entire web a little more unreliable, and takes control away from app devs. I understand that it's still useful for delegating partial, revocable permissions to an app, but I've come to feel that it's just not reliable enough to build a business on top of. Not to mention the fact that it gives the big guys lots of control over how their users' data is used elsewhere, and the bigs have all consistently flexed these muscles to the detriment of product diversity & innovation on the web.

I'm firmly in the camp of ethical scraping now. The OAuth API thing just isn't working out for me. It's too much of a time suck, and too much of a risk on both the technology side and the business side.

Interesting case study about Encryptanet. I did not know that.

Greg, thanks for the Encryptanet story.

Lucas, I like the phrase ethical scraping... It's the same principle that made Googlebot successful.
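One concrete reading of "ethical scraping" (an assumption here; the thread doesn't define it) is doing what well-behaved crawlers like Googlebot do and honoring a site's robots.txt. A minimal Python sketch with the standard library's `urllib.robotparser`, parsing robots.txt text that has already been fetched; `allowed` is a hypothetical helper name, and `Crawl-delay` is a non-standard directive that not every crawler honors.

```python
from typing import Optional, Tuple
from urllib import robotparser


def allowed(robots_txt: str, user_agent: str, url: str) -> Tuple[bool, Optional[int]]:
    """Check a URL against robots.txt rules before scraping it.

    Returns (may_fetch, crawl_delay_seconds); the delay is None when the
    file doesn't specify one for this user agent.
    """
    rp = robotparser.RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp.can_fetch(user_agent, url), rp.crawl_delay(user_agent)
```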

I just re-read this and you're pretty much espousing the state of the art: http://onlinemarketingnews.org/just-how-smart-are-search-robots

I imagine ethical scraping is useful for other things like extracting the real URLs from shortened URLs or getting good content that is hidden by DOM tricks.

Sounds like you're inviting a lawsuit.

BTW, how do I sign in with my existing PandaWhale username without using TW/FB/LI?

We still need to build our own authentication, Tim.

We were foolish in believing a web service in 2012 could exist just using OAuth.

Little did we realize that all the existing OAuth servers are unreliable.

So... it's on our "to do" list.

Using HTML for APIs is a wonkish approach disguised as pragmatism. No normal people should/will do this.

It's not an approach that everyone will use, because it is brittle.

But scraping has been around since the dawn of the Web.

Why Tweetbot for Mac is $20 -- it's because of Twitter rate limiting.

5:51 PM Oct 06 2012